- INSTALL APACHE SPARK ON REDHAT WITHOUT SUDO HOW TO

- INSTALL APACHE SPARK ON REDHAT WITHOUT SUDO INSTALL

- INSTALL APACHE SPARK ON REDHAT WITHOUT SUDO DOWNLOAD

Tags Apache Spark, Debian Tips, Ubuntu Tips Post navigation We will catch you with another interesting article very soon. You can also check if spark-shell works fine by launching the spark-shell command. From the page, you can see my master and slave service is started.

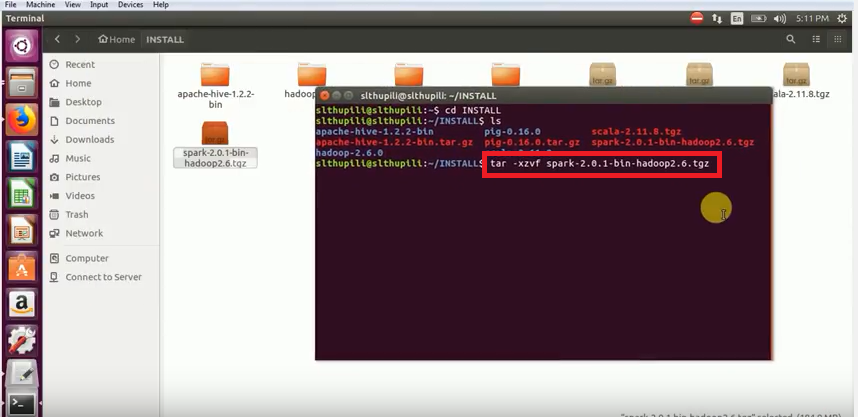

Once the service is started go to the browser and type the following URL access spark page. $ start-workers.sh spark://localhost:7077 Run the following command to start the Spark master service and slave service. Spark Binaries Start Apache Spark in Ubuntu $ source ~/.profileĪll the spark-related binaries to start and stop the services are under the sbin folder. To make sure that these new environment variables are reachable within the shell and available to Apache Spark, it is also mandatory to run the following command to take recent changes into effect. $ echo "export PYSPARK_PYTHON=/usr/bin/python3" > ~/.profile $ echo "export PATH=$PATH:/opt/spark/bin:/opt/spark/sbin" > ~/.profile $ echo "export SPARK_HOME=/opt/spark" > ~/.profile profile file before starting up the spark. Now you have to set a few environmental variables in your. $ sudo mv spark-3.1.1-bin-hadoop2.7 /opt/sparkĬonfigure Environmental Variables for Spark $ tar -xvzf spark-3.1.1-bin-hadoop2.7.tgzįinally, move the extracted Spark directory to /opt directory. Now open your terminal and switch to where your downloaded file is placed and run the following command to extract the Apache Spark tar file.

INSTALL APACHE SPARK ON REDHAT WITHOUT SUDO DOWNLOAD

Alternatively, you can use the wget command to download the file directly in the terminal. 3.1.1) at the time of writing this article. Now go to the official Apache Spark download page and grab the latest version (i.e.

INSTALL APACHE SPARK ON REDHAT WITHOUT SUDO INSTALL

Scala code runner version 2.11.12 - Copyright 2002-2017, LAMP/EPFL Install Apache Spark in Ubuntu To verify the installation of Scala, run the following command.

$ sudo apt install scala ⇒ Install the package $ sudo apt search scala ⇒ Search for the package Next, you can install Scala from the apt repository by running the following commands to search for scala and install it.

INSTALL APACHE SPARK ON REDHAT WITHOUT SUDO HOW TO

If no output, you can install Java using our article on how to install Java on Ubuntu or simply run the following commands to install Java on Ubuntu and Debian-based distributions. Most of the modern distributions come with Java installed by default and you can verify it using the following command. To install Apache Spark in Ubuntu, you need to have Java and Scala installed on your machine. In this article, we will be seeing how to install Apache Spark in Debian and Ubuntu-based distributions. Spark is mostly installed in Hadoop clusters but you can also install and configure spark in standalone mode. It also supports Java, Python, Scala, and R as the preferred languages. Spark supports various APIs for streaming, graph processing, SQL, MLLib.

It is an in-memory computational engine, meaning the data will be processed in memory. Apache Spark is an open-source distributed computational framework that is created to provide faster computational results.
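If you want to script the prerequisite check from the Java and Scala step, a small shell function is enough. This is a minimal sketch: `check_prereqs` is a hypothetical helper of our own, not part of Spark or Ubuntu, and it only tests whether each command is on your PATH, not which version is installed.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Spark): checks whether each named command
# is available on PATH. Prints every missing command on its own line and
# returns 1 if at least one is missing, 0 otherwise.
check_prereqs() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "$cmd"
      missing=1
    fi
  done
  return "$missing"
}

# Example: check for the prerequisites named in this article.
# check_prereqs java scala || echo "Install the commands listed above first."
```

Because it returns a non-zero status when something is missing, `check_prereqs java scala` can guard the rest of an install script.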

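The wget alternative from the download step can be wrapped in the same way. This sketch assumes the release is fetched from the Apache archive at archive.apache.org; that URL layout (spark-VERSION/spark-VERSION-bin-PACKAGE.tgz) is our assumption, not something stated in this article, so compare it with the official download page first. `spark_archive_url` and `fetch_spark` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: builds the download URL for a Spark release.
# ASSUMPTION: the archive.apache.org directory layout used below.
spark_archive_url() {
  local version="$1" package="$2"   # e.g. 3.1.1 and hadoop2.7
  echo "https://archive.apache.org/dist/spark/spark-${version}/spark-${version}-bin-${package}.tgz"
}

# Hypothetical helper: downloads the archive into the current directory
# with wget and extracts it there.
fetch_spark() {
  local version="$1" package="$2" url
  url=$(spark_archive_url "$version" "$package")
  wget "$url" && tar -xzf "spark-${version}-bin-${package}.tgz"
}

# Example (a large download):
# fetch_spark 3.1.1 hadoop2.7
# sudo mv spark-3.1.1-bin-hadoop2.7 /opt/spark
```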

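Appending the export lines with a plain echo ... >> adds a duplicate line every time the setup is repeated, while a single > replaces the whole file. The sketch below appends each line to ~/.profile only if it is not already present. `append_once` and `setup_spark_profile` are hypothetical helpers; the values are the /opt/spark and /usr/bin/python3 locations used in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: appends LINE to FILE only if FILE does not already
# contain it as an exact whole line, so re-running the setup is harmless.
append_once() {
  local line="$1" file="$2"
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Hypothetical helper: writes the three Spark variables from this article.
# Defaults to ~/.profile; single quotes keep $PATH unexpanded in the file.
setup_spark_profile() {
  local profile="${1:-$HOME/.profile}"
  append_once 'export SPARK_HOME=/opt/spark' "$profile"
  append_once 'export PATH=$PATH:/opt/spark/bin:/opt/spark/sbin' "$profile"
  append_once 'export PYSPARK_PYTHON=/usr/bin/python3' "$profile"
}

# Example:
# setup_spark_profile && source ~/.profile
```

Passing a scratch file, as in `setup_spark_profile /tmp/profile-test`, lets you preview what would be written before touching your real ~/.profile.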